Students consider how statistics and probability drive AI text generation, learning that language models can produce text that sounds credible but may not actually be credible.

Introduction to Statistical Language Modeling

Overview

Students explore how text prediction relies on statistical language modeling.

Launch

While texting, emailing, or even conducting a web search, you’ve probably noticed that your thoughts and sentences are sometimes finished for you! Predictive text is now a common feature of many apps, and it is made possible by a certain form of AI: the statistical language model.

Make sure you’ve made a copy of the survey (Google) and have created a link to share with students, so that you can look at your data as a class!

-

Consider this start of a phrase: "Close the…"

-

Use the form to record the next word that pops into your mind.

-

Type in two other possible next words.

Display the survey results for the class to see. If a word is repeated, summarize the results by keeping a tally of how many students suggest each particular word.

Students will think of words like "door", "box", "microwave", "laptop"…

Investigate

Let’s look a little more closely at the words we generated.

-

Which words were suggested most often?

-

Which words were suggested least often?

-

Are you surprised by the most and least common words? Why or why not?

-

What fraction of our class proposed each of our top three words?

-

For example: If there are 25 students in your class, and 8 students chose “door”, it was chosen 8/25ths of the time.

Statistical language models are built on the assumption that the fraction of the time that a word follows a series of words in a training corpus can help predict the likelihood of it being the right word to follow that same series of words in a future scenario. In this lesson, we will refer to that fraction as its probability.
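For classes that want to see the tally-and-fraction step in code, here is a minimal Python sketch. The survey responses are invented for illustration (they are not real class data), and the function name `word_fractions` is our own.

```python
from collections import Counter

# Hypothetical survey responses, invented for illustration;
# substitute your own class's answers.
responses = ["door", "window", "door", "box", "door", "laptop", "window", "door"]

def word_fractions(words):
    """Map each word to (count, total): how often it was suggested, out of all responses."""
    tally = Counter(words)
    total = len(words)
    # most_common() lists the most frequently suggested words first.
    return {word: (count, total) for word, count in tally.most_common()}

class_fractions = word_fractions(responses)
for word, (count, total) in class_fractions.items():
    print(f"{word}: {count}/{total} = {count / total:.0%}")
```

With these made-up responses, "door" was suggested 4 times out of 8, so its probability in this tiny sample is 4/8.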

-

Do you think a text messaging app with a predictive text feature would produce the same results as our class? Why or why not?

-

Answers will vary. Invite conversation.

-

An app will probably not suggest all the same words.

-

Our class is a pretty small sample: we are all from the same generation and geography, and we are all currently at school, so our minds are primed to respond differently than if we were somewhere else.

Similar to other AI systems we’ve discussed, predictive text features are trained on a large amount of data, in this case, text.

During training:

-

The computer extracts each use of the phrase "Close the" from the training dataset, along with the subsequent word.

-

It then determines the probability of each possible next word.

When making a suggestion:

It offers the words that had the highest probability of following "Close the" in the training dataset.
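The training and suggestion steps described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the training text is a tiny invented example, the function names are hypothetical, and real predictive text systems are considerably more elaborate.

```python
from collections import Counter

# Hypothetical miniature training text, invented for illustration only.
training_text = (
    "please close the door . close the window before you go . "
    "close the door quietly . i will close the box . close the door"
)

def train_next_word_counts(text, phrase):
    """Training: find each use of `phrase` and count the word that follows it."""
    tokens = text.lower().split()
    context = phrase.lower().split()
    n = len(context)
    counts = Counter()
    for i in range(len(tokens) - n):
        if tokens[i:i + n] == context:
            counts[tokens[i + n]] += 1
    return counts

def suggest(counts, how_many=3):
    """Suggestion: offer the words with the highest probability of following the phrase."""
    total = sum(counts.values())
    return [(word, count / total) for word, count in counts.most_common(how_many)]

counts = train_next_word_counts(training_text, "Close the")
for word, probability in suggest(counts):
    print(f"{word}: {probability:.0%}")
```

In this toy text, "door" follows "close the" in 3 of 5 cases, so it is suggested first.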

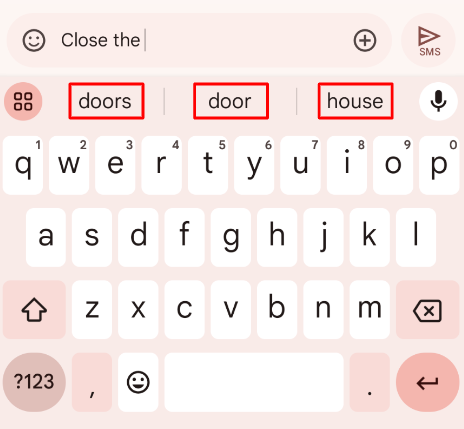

Consider this screenshot from a text messaging app.

In the training corpus it was built on, "door", "doors", and "house" most commonly appeared after "Close the".

As a result, those words are suggested.

But are all users recommended the same completions?

-

What do you think? Will your phone suggest the same words as your classmates' phones would? Why or why not?

-

Before we get into the in-depth student-facing explanation, below, invite discussion!

-

This question is a great opportunity to revisit previous concepts, in particular the process of training and sampling that students discussed when thinking about plagiarism detection.

For a variety of reasons, not all phones will propose the same completions:

-

There might be multiple words with equal probability, in which case the system must use some method of random selection. As a result, even the same phone won’t necessarily propose the same word every time.

-

Not all phones use the same system. Systems are generally built with at least a little bit of randomness; the amount of randomness varies from system to system.

-

A messaging app also includes a model of the text that the actual user composes. The app therefore offers a degree of personalization based on what the user has chosen in the past. Similarly, the app can take into account the word suggestions that you have previously accepted.

-

Some predictive text features even take into account the specific message that you are composing! For instance, typing the first letter of an unusual word that you used earlier in the same message triggers the app to propose that unusual word.

You have just considered the workings and in-context use of a statistical language model. Hopefully you have discovered that, although it sometimes may seem like your texting app can read your mind… it can’t. It doesn’t know the rules of grammar, the meanings of words, or your intentions when you are composing a text. It just knows probability, which it uses in ways that are often very impressive (but sometimes not!).

Throughout the lesson, we’ll explore the very important "sometimes not" parenthetical, above.

Synthesize

-

Might statistical language modeling be possible for other spoken human languages? Which languages?

-

Statistical language modeling will work for any language! The AI does not need to "know" anything about the rules of grammar; it just follows rules that enable it to identify patterns.

-

Can you think of other things besides human spoken languages that a similar approach might work for?

-

With statistical language modeling, AI can compose music, play chess games, and more. The "text" does not need to be made up of words: any symbolic notation at all will do as long as it uses spaces to separate the symbolic "words".

Constructing a Statistical Language Model

Overview

Students construct a statistical language model by decomposing the text and computing the probabilities of different words following each other.

Launch

The best way to make sense of statistical language modeling is to try it yourself! We’ll start by constructing a model.

For our corpus, we will use the folk song There Was an Old Lady Who Swallowed a Fly, which tells the nonsensical story of an old lady who swallows a fly, and the unfortunate series of events that follow.

First, we will decompose the title of our corpus into differently sized chunks (one word at a time, two words at a time, etc.):

| Chunk size | Quantity | Decomposition |
|---|---|---|
| 1 word | 9 | (There) (Was) (an) (Old) (Lady) (Who) (Swallowed) (a) (Fly) |
| 2 words | 8 | (There Was) (Was an) (an Old) (Old Lady) (Lady Who) (Who Swallowed) (Swallowed a) (a Fly) |
| 3 words | 7 | (There Was an) (Was an Old) (an Old Lady) (Old Lady Who) (Lady Who Swallowed) (Who Swallowed a) (Swallowed a Fly) |

The formal word computer scientists use in this context is not "chunk" but n-gram. In an n-gram, n represents the number of words in the chunk. For special cases where n is 1, 2, or 3, the n-grams are called unigrams, bigrams, and trigrams.
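The decomposition of the title into n-grams can also be done by a short program. The sketch below assumes that words are separated by spaces; the function name `ngrams` is our own choice.

```python
def ngrams(text, n):
    """Split `text` on spaces and return every run of n consecutive words."""
    tokens = text.split()
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

title = "There Was an Old Lady Who Swallowed a Fly"

for n in (1, 2, 3):
    chunks = ngrams(title, n)
    formatted = " ".join("(" + " ".join(chunk) + ")" for chunk in chunks)
    print(f"{n}-grams: {len(chunks)} -> {formatted}")
```

For a nine-word title this yields 9 unigrams, 8 bigrams, and 7 trigrams: each increase in n removes one chunk, because a longer window has one fewer place to start.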

-

What other collections of words have you encountered that begin with "uni", "bi", and "tri"?

-

Answers will vary!

-

unicycle, bicycle, tricycle

-

(monomial), binomial, trinomial…

-

triangle, n-gon

-

biceps, triceps, (quadriceps)

-

unicorn

Investigate

Let’s dig a little deeper…

Share the song lyrics with students to read independently. If desired, you could also listen to a recorded version of the song.

The phrase "there was an old lady who swallowed a…" is repeated in our corpus! Let’s zoom in on one unigram from that phrase: “there”.

-

Referring to the corpus (the lyrics and the title): how many times does the word "there" appear in the song?

-

5

-

In this corpus, how many times was the word "there" followed by the word "was"?

-

5

-

What is the probability that the word "there" is followed by the word "was"?

-

5/5 or 100%

In the example you just worked through, you computed the probability that "was" appears after the unigram "there"…

by dividing 5 (how many times we see "there" followed by "was") by 5 (how many times we see "there" followed by anything).

We can represent this computation with a special notation:

$$p(\textit{was} \mid \textit{there}) = \frac{\textit{count(there was)}}{\textit{count(there …)}} = \frac{5}{5}$$
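The same ratio can be computed by a program. The sketch below is a minimal illustration: the function name `conditional_probability` is hypothetical, and to keep the example short it uses only the song's title (lowercased) rather than the full lyrics, so its counts are smaller than the ones students find on the worksheet.

```python
def conditional_probability(tokens, context, word):
    """Estimate p(word | context) = count(context word) / count(context ...)."""
    context = tuple(context)
    n = len(context)
    followed_by_anything = 0
    followed_by_word = 0
    for i in range(len(tokens) - n):
        if tuple(tokens[i:i + n]) == context:
            followed_by_anything += 1
            if tokens[i + n] == word:
                followed_by_word += 1
    if followed_by_anything == 0:
        return None  # the context is never followed by anything in this corpus
    return followed_by_word / followed_by_anything

# Title only, lowercased: an assumption made to keep the example small.
tokens = "there was an old lady who swallowed a fly".split()

p_was_given_there = conditional_probability(tokens, ["there"], "was")
print(p_was_given_there)
```

The context is passed as a list so that the same function works for bigrams and trigrams, for example `conditional_probability(tokens, ["swallowed", "a"], "fly")`.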

-

Complete Constructing a Language Model.

-

What training corpus did we use to construct the language model?

-

The song lyrics, including the title of the song, were our corpus.

-

Make a prediction: How can we make use of the ratios we completed on Constructing a Language Model?

-

We can refer to our ratios to determine which word is the most likely to follow a given word.

Are you and your students interested in exploring probability in more depth? Check out our lesson on Probability, Inference, and Sample Size to dig deeper.

Synthesize

In our song corpus,

-

there were four possible completions for the unigram "the"

-

there were only three possible completions for the 3-gram "to catch the"

We can say that, in this corpus, as the n-gram gets longer, the number of completion options decreases.

-

Do you think it will generally be true of other corpuses that as the n-gram gets longer, the number of completion options decreases?

-

Yes, in general. Every occurrence of a longer phrase is also an occurrence of its last word, so the longer phrase can never have more possible completions than that word alone, and it usually has fewer.
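Students can test this pattern on any text by counting the distinct completions of contexts of different lengths. The sketch below uses a short text written in the style of the song (not the full lyrics), so its counts will not match the worksheet; the function name `completions` is our own.

```python
def completions(tokens, context):
    """Return the set of distinct words that follow `context` in `tokens`."""
    context = tuple(context)
    n = len(context)
    return {
        tokens[i + n]
        for i in range(len(tokens) - n)
        if tuple(tokens[i:i + n]) == context
    }

# A short toy text in the style of the song, used here instead of the full lyrics.
toy_tokens = (
    "she swallowed the cat to catch the bird "
    "she swallowed the bird to catch the spider"
).split()

for context in (["the"], ["catch", "the"], ["to", "catch", "the"]):
    options = completions(toy_tokens, context)
    print(" ".join(context), "->", len(options), sorted(options))
```

In this toy text, "the" has three distinct completions, while "to catch the" has only two.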

Sampling from the Model

Overview

Students use their statistical language model in a generative way, to produce output.

Launch

Having built a language model, what can we do with it? We can use it in a generative way: we can produce output!

How might we go about doing that?

-

We can start by choosing our first word. A common approach is to ask, "What’s the most common n-gram in the corpus?" but we can also choose the starting word on our own, if we want.

-

Next, we ask: "Given the first n-gram, what is the most common successor?"

-

We repeat this second step forever! …or, more realistically, until we decide to stop the program. A simple statistical language model, however, will generate text ad infinitum.
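The steps above can be sketched as a short program. This is a minimal illustration using a bigram model (each word is predicted from the single word before it) and a toy text in the style of the song, not the full lyrics. The function names and the `max_words` cutoff are assumptions of this sketch; ties are not handled specially here, since `most_common` simply returns the first of the tied words.

```python
from collections import Counter, defaultdict

def build_bigram_model(tokens):
    """For every word, count which words follow it in the corpus."""
    model = defaultdict(Counter)
    for current, following in zip(tokens, tokens[1:]):
        model[current][following] += 1
    return model

def generate(model, start, max_words):
    """Repeatedly append the most common successor of the last word."""
    words = [start]
    while len(words) < max_words:
        successors = model.get(words[-1])
        if not successors:
            break  # the last word never has a successor in the corpus
        words.append(successors.most_common(1)[0][0])
    return words

# Toy text in the style of the song, used here instead of the full lyrics.
toy_tokens = (
    "she swallowed the spider to catch the fly "
    "she swallowed the bird to catch the spider"
).split()

model = build_bigram_model(toy_tokens)
print(" ".join(generate(model, "she", 4)))
print(" ".join(generate(model, "she", 12)))
```

The second call shows why a stopping rule is needed: after "spider" the model falls into the cycle "to catch the spider" and would repeat it indefinitely if `max_words` did not cut it off.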

Investigate

Let’s give this process a try, returning to our "Old Lady" corpus.

Note that on Sampling from the Language Model, questions build on one another. In other words, a student who misses Question 2 will also get Question 3 incorrect. To ensure that class runs smoothly, we encourage you to require that students check their work with a partner before moving from one question to the next. Encouraging students to annotate There Was an Old Lady Who Swallowed a Fly will also result in more accurate counting and therefore more correct responses!

-

Complete the first section of Sampling from the Language Model using There Was an Old Lady Who Swallowed a Fly.

-

Tip: Annotate your There Was an Old Lady Who Swallowed a Fly! Highlighters and different colors of ink can help you to stay organized.

The two questions below are on students' worksheets, but merit follow-up and discussion.

-

What four-word phrase did you generate?

-

"She swallowed a fly"

-

Did everyone in your class end up with the same phrase? How and why did that happen?

-

Yes. When considering which word to generate next, there was always one word that was clearly the most probable, and there were no ties.

-

Complete the second section of Sampling from the Language Model.

-

What four-word phrase did you generate for Text Generation 2a?

-

The class should be split between "the spider to catch" and "the spider that wriggled".

-

Why didn’t everyone end up with the same phrase?

-

We were forced to incorporate randomness when there was a tie for the most probable word to follow "spider".
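One way to handle a tie in code is to collect all the words that share the highest count and pick one of them at random. The sketch below assumes hypothetical successor counts (they are not taken from the lyrics), and the function name `pick_most_common` is our own.

```python
import random
from collections import Counter

def pick_most_common(successors, rng):
    """Return the most common successor, choosing at random among tied words."""
    best = max(successors.values())
    tied = sorted(word for word, count in successors.items() if count == best)
    return rng.choice(tied)

# Hypothetical counts of the words that follow some word, with a two-way tie.
successors = Counter({"to": 2, "that": 2, "and": 1})

rng = random.Random()  # pass a seed, e.g. random.Random(0), for repeatable results
picks = [pick_most_common(successors, rng) for _ in range(10)]
print(picks)
```

Each run may print a different mix of "to" and "that", which mirrors how classmates following the same rules ended up with different phrases.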

Modern statistical language models often invite users to adjust the temperature of the generated text, which influences the level of randomness. For instance, ChatGPT users are encouraged to use a low temperature for more focused and less creative tasks, and a higher temperature for more creative tasks, where more varied output is welcome.

Temperature is the parameter that controls the randomness of the model’s output as it generates text.

Even without the ability to raise the temperature, we encountered randomness and variability in our generated texts. With a large enough corpus and a high enough temperature, a statistical language model will produce a new and unique output every single time!
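To make temperature concrete, here is a sketch of one common formulation: raise each probability to the power 1/T, rescale so the results sum to 1, and then sample at random using those weights. This is an assumption chosen for illustration, not a description of how any particular product implements temperature, and the word probabilities are invented.

```python
import random

def apply_temperature(probabilities, temperature):
    """Rescale probabilities as p ** (1 / T), then renormalize to sum to 1."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = {word: p ** (1.0 / temperature) for word, p in probabilities.items()}
    total = sum(scaled.values())
    return {word: value / total for word, value in scaled.items()}

def sample(probabilities, temperature, rng):
    """Pick one word at random, weighted by the temperature-adjusted probabilities."""
    adjusted = apply_temperature(probabilities, temperature)
    words = list(adjusted)
    weights = [adjusted[word] for word in words]
    return rng.choices(words, weights=weights, k=1)[0]

# Hypothetical next-word probabilities, invented for illustration.
next_word = {"door": 0.6, "window": 0.3, "box": 0.1}

for temperature in (0.5, 1.0, 2.0):
    adjusted = apply_temperature(next_word, temperature)
    summary = ", ".join(f"{word} {p:.2f}" for word, p in adjusted.items())
    print(f"T={temperature}: {summary}")

print(sample(next_word, 2.0, random.Random()))
```

A temperature below 1 pushes more of the probability toward the most likely word, a temperature of 1 leaves the probabilities unchanged, and a temperature above 1 spreads the probability more evenly, so less likely words are chosen more often.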

AI "Hallucinations"

As generative AI produces text, it often generates incorrect or misleading information. This is commonly known as an AI "hallucination".

Some experts dislike this term and are encouraging an end to its use. These experts argue that all output is "hallucinatory". Some of it happens to match reality… and some does not.

The very same process that generates "hallucinatory" text also generates the "non-hallucinatory" text. This truth helps us to understand why it is so difficult to fix the "hallucination" problem.

This term also attributes intent and consciousness to the AI, giving it human qualities when it is merely executing a program exactly as it was designed to.

Synthesize

Critics of ChatGPT and other language models raise a variety of concerns. Consider each of them, below.

-

ChatGPT sometimes "makes stuff up." Why does this happen? What is actually going on?

-

When ChatGPT produces false or misleading information, it is not glitching, nor is there a bug. ChatGPT is just doing what it does, following the model as it ought to.

-

ChatGPT has biases that can be seen in its text output. Where do these biases come from?

-

If there are biases in the corpus, there will likely be biases in the output!

These materials were developed partly through support of the National Science Foundation (awards 1042210, 1535276, 1648684, 1738598, 2031479, and 1501927). Bootstrap by the Bootstrap Community is licensed under a Creative Commons 4.0 Unported License. This license does not grant permission to run training or professional development. Offering training or professional development with materials substantially derived from Bootstrap must be approved in writing by a Bootstrap Director. Permissions beyond the scope of this license, such as to run training, may be available by contacting contact@BootstrapWorld.org.
